Idea of Superposition

Let's start with a question: "Can I be in two different positions at the same time?"

Obviously, no.

OK, let's ask a more specific question: "Can I be happy and sad at the same time?"

🤔🤔🤔

Well, many times in life we are neither completely happy nor completely sad. If we measure mood on a scale from 1 (sad) to 10 (happy), we may be somewhere in between.

Let 1 mean sad, 2 - 4 more likely sad, 5 - 6 neither happy nor sad, 7 - 9 more likely happy, and 10 happy.

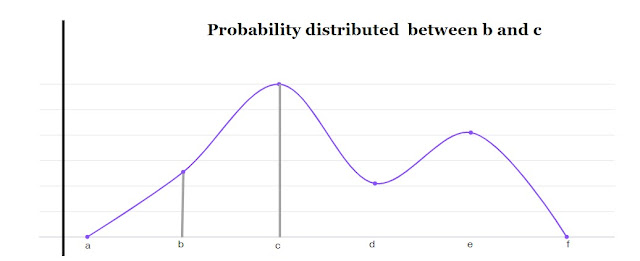

Have a look at the following table and graph -

(Scores actually represent inner psychological feelings)

Since the average of all the scores over the whole day is 7, we can say the expected mood is "more likely happy". But if we look at the data locally, it does not say so: the mood is some up-and-down combination of happy and sad (like a wave spread over the whole day).
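To make the difference between the whole-day average and the local readings concrete, here is a small sketch. The scores in it are made up for illustration (they are not the data from the table), chosen only so that their average comes out to 7.

```python
from statistics import mean

# Hypothetical mood scores (1 = sad, 10 = happy), one per measurement in a day.
# These numbers are invented for illustration; they are not the table's data.
scores = [9, 4, 10, 3, 8, 5, 10, 7]

average = mean(scores)                      # the "whole day" picture
lowest, highest = min(scores), max(scores)  # the "local" picture

print("average mood over the day:", average)
print("individual readings range from", lowest, "to", highest)
```

The average alone says "more likely happy", while the individual readings swing between 3 and 10.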

There are two observations I would like to mention here.

1. As I mentioned earlier, although I am expected to be more likely happy over the day, my inner feelings at any given moment do not say so. The graph shows the whole day's ups and downs in mood.

2. I will know whether I am happy or sad at a particular time only if I measure at that time. Imagine doing this experiment with someone else: you learn that person's mood at a particular time only if you measure at that time; otherwise, all you have is the average that came out at the end. Once measured, the mood at that time is at one fixed position.

So we can clearly see two kinds of behaviour: one from experimentation (the overall picture we get from the data) and another from observation (measuring locally). It's not that something is wrong with our experiment; nature simply works like that.

Scientists call such phenomena superposition.

The word superposition can be split into two parts, super and position. In Latin, super means "over" or "above", and position means "placement". Taken together, saying that things are "in superposition" suggests that they are stacked or placed on top of each other.

In the early days, the principle of superposition was used to describe how a vibrating system's motion is the sum of its proper vibrations.

But in 1801, the English physicist Thomas Young gave the idea a new use with his famous double-slit experiment.

Double-slit experiment: you can read about this experiment in books or on the internet.

Simply put, Young's experiment showed that light behaves like a wave and creates interference patterns; later versions of the experiment showed that electrons can act like waves too.

After that, scientists came to define superposition as a property of a quantum system: the system can exist in a combination of multiple states until it is measured.

This principle is often illustrated with a beautiful thought experiment called "Schrödinger's cat". In a gentle version of it, a cat is placed in a sealed box with cat food that has a sleeping pill mixed in. The cat may or may not eat the food, so until we open the box and check, we treat the cat as being both asleep and awake. Once we open the box, we find only one of the two.

So experiments and observations give us two different behaviours.

Now I think you are in a superposition of convinced and not convinced.

In my previous blogs, I discussed probability distributions and the development of linear algebra. There I described, with real-life examples, how both theories developed and became some of the most important subjects in science today.

You can read them by clicking on the links below.

1. What is the probability you will open this blog?

2. The Axioms - Probability

3. Probability Distribution

4. Linear Algebra - Purpose to Application

5. The development of Linear Algebra

So the question you may now have in mind is why or how probability and linear algebra are important in our context. Let's discuss that.

In the case of probability, recall the graph above: the mood is distributed probabilistically over the whole day. So probability plays a huge role in giving the expected value.

But in the case of linear algebra, it's a little deeper.

In linear algebra we mostly discuss vectors and vector spaces; I covered that in the last blog, "The Development of Linear Algebra".

The theory of vector space or say linear algebra gives us two important concepts -

a) Linear Combination of vectors and b) Basis of a vector space.

a) Linear Combination of vectors - A vector built from other vectors by adding them, multiplying them by constants (scalars), or doing both is called a linear combination of those vectors.

Example - Let ℝ be our real vector space with the usual addition and scalar multiplication over the field ℝ, written (ℝ, ℝ, +, *, (+), (.)). Any c ∈ ℝ can be seen as c = c (.) 1, or c = 2 (.) (c/2), or in many other ways. Here, on the left-hand side c is a vector in ℝ; on the right-hand side c and 2 are scalars in the field ℝ, and 1 and c/2 are vectors in ℝ.

So, in general, a vector v that is a linear combination of x1, x2, ..., xn can be written as v = c1 x1 + c2 x2 + ... + cn xn.
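A linear combination is easy to compute by hand, but a short sketch may help. The helper name `linear_combination` and the sample vectors are my own, chosen for illustration; vectors are plain Python lists of equal length.

```python
def linear_combination(coefficients, vectors):
    """Return c1*x1 + c2*x2 + ... + cn*xn for vectors given as equal-length lists."""
    if len(coefficients) != len(vectors):
        raise ValueError("need exactly one coefficient per vector")
    dimension = len(vectors[0])
    result = [0] * dimension
    for c, x in zip(coefficients, vectors):
        for i in range(dimension):
            result[i] += c * x[i]  # add the contribution c * x, component by component
    return result


x1 = [1, 0]
x2 = [0, 1]
v = linear_combination([3, -2], [x1, x2])
print(v)  # [3, -2]
```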

b) Basis of a vector space - A set of vectors A = {x1, x2, ..., xn} in a vector space V is called a basis of V if it satisfies two properties:

i) The set A is linearly independent, i.e., if c1 x1 + c2 x2 + ... + cn xn = Θ (the zero vector), then c1 = c2 = ... = cn = 0.

ii) V is generated by A, i.e., any v ∈ V can be written as v = c1 x1 + c2 x2 + ... + cn xn.

So intuitively, if we know a basis of a vector space, we know its building blocks, and that gives us many different kinds of information about the vector space.
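As a rough sketch of the two basis properties in the plane, the code below checks whether two vectors are linearly independent (non-zero determinant) and, if so, finds the coefficients c1 and c2 for a given v using Cramer's rule. The function name `coordinates_in_basis` and the sample vectors are hypothetical, and the sketch assumes integer components so that exact fractions can be used.

```python
from fractions import Fraction


def coordinates_in_basis(v, x1, x2):
    """Solve v = c1*x1 + c2*x2 in the plane; return None if {x1, x2} is not a basis."""
    det = x1[0] * x2[1] - x1[1] * x2[0]
    if det == 0:
        return None  # x1 and x2 are linearly dependent, so they cannot form a basis
    # Cramer's rule for the 2x2 system (integer components assumed)
    c1 = Fraction(v[0] * x2[1] - v[1] * x2[0], det)
    c2 = Fraction(x1[0] * v[1] - x1[1] * v[0], det)
    return c1, c2


print(coordinates_in_basis([3, 1], [1, 1], [1, -1]))  # coefficients 2 and 1
print(coordinates_in_basis([3, 1], [1, 2], [2, 4]))   # None: [2, 4] is 2 * [1, 2]
```

In the first call, 2 * (1, 1) + 1 * (1, -1) = (3, 1), so the two vectors generate v; in the second, the vectors lie on the same line and fail property i).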

Now consider a special kind of vector space called Hilbert space.

A Hilbert space H is a complete inner product space, meaning it is a vector space equipped with an inner product that allows for the measurement of angles and lengths (norms), and every Cauchy sequence in the space converges to a point within the space.

Hilbert spaces generalize the concept of Euclidean spaces to infinite dimensions and are essential in the mathematical formulation of quantum mechanics.

So a linear combination of vectors ψ1, ψ2, ..., ψn in H has the form

ψ = c1 ψ1 + c2 ψ2 + ... + cn ψn,

where c1, c2, ..., cn are scalars (which can be real or complex numbers), and ψ is a vector in the Hilbert space H.

In the context of quantum mechanics, these vectors ψ1,ψ2, .... ,ψn might represent quantum states, and the scalars c1,c2, .... ,cn represent probability amplitudes.
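To see what "probability amplitude" means in practice, here is a minimal sketch for a hypothetical two-state system. It uses the standard rule that the probability of finding a state on measurement is the squared magnitude of its amplitude; the particular amplitudes are my own choice for illustration.

```python
import math

# Hypothetical amplitudes for two basis states psi1 and psi2.
c1 = complex(1 / math.sqrt(2), 0)
c2 = complex(0, 1 / math.sqrt(2))  # amplitudes may be complex numbers

# Probability of each outcome = squared magnitude of its amplitude.
p1 = abs(c1) ** 2
p2 = abs(c2) ** 2
total = p1 + p2

print("P(psi1) =", round(p1, 3))
print("P(psi2) =", round(p2, 3))
print("total   =", round(total, 3))
```

Both outcomes are equally likely here, and the probabilities add up to 1, as they must for a properly normalized state.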

In a Hilbert space, the inner product ⟨ψ, φ⟩ of two vectors ψ and φ provides a measure of their "overlap" or "similarity".

If ⟨ψ, φ⟩ = 0, then ψ and φ are orthogonal, meaning they are independent in the sense of having no component in common.
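A small sketch of the inner product on complex vectors, assuming the common physics convention of taking the complex conjugate of the first argument. The function name `inner_product` and the two sample vectors are hypothetical.

```python
def inner_product(u, v):
    """Inner product <u, v> on C^n, conjugating the entries of the first vector."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


psi = [1, 1j]
phi = [1, -1j]

overlap = inner_product(psi, phi)           # zero overlap means orthogonal
length_squared = inner_product(psi, psi).real

print("overlap of psi and phi:", overlap)
print("squared length of psi:", length_squared)
```

The overlap comes out as zero, so these two vectors are orthogonal, while the inner product of psi with itself gives its squared length, 2.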

Just as in finite-dimensional vector spaces, a Hilbert space can have a basis. However, in an infinite-dimensional Hilbert space, this basis is typically an orthonormal basis, which consists of vectors e1, e2, ... such that:

⟨ei, ej⟩ = 1 if i = j, and ⟨ei, ej⟩ = 0 if i ≠ j.

Any vector ψ in the Hilbert space can be expressed as a (possibly infinite) linear combination of these basis vectors:

ψ = c1 e1 + c2 e2 + ...

where ci = ⟨ei, ψ⟩ are the coefficients (which can be seen as projections of ψ onto the basis vectors ei).
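The expansion in an orthonormal basis can be tried out in two dimensions. In this sketch the basis vectors `e1`, `e2` and the vector `psi` are my own illustrative choices: each coefficient is the inner product of a basis vector with psi, and adding the basis vectors back with those coefficients rebuilds psi.

```python
import math


def inner_product(u, v):
    """Inner product <u, v>, conjugating the entries of the first vector."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


s = 1 / math.sqrt(2)
e1 = [s, s]    # hypothetical orthonormal basis of the plane
e2 = [s, -s]
psi = [3, 1]

# Coefficients are the projections of psi onto the basis vectors.
c1 = inner_product(e1, psi)
c2 = inner_product(e2, psi)

# Rebuild psi as c1*e1 + c2*e2.
rebuilt = [c1 * a + c2 * b for a, b in zip(e1, e2)]
print("coefficients:", c1, c2)
print("rebuilt vector:", rebuilt)
```

Up to floating-point rounding, the rebuilt vector matches psi, which is exactly what property ii) of a basis promises.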
